Governance of AI agents has become increasingly important as autonomous systems take on complex roles in real-world settings. Regulated autonomy offers both a theoretical and a practical approach to maintaining accountability and oversight.

In critical sectors like Oil & Gas, autonomous AI agents hold vast potential to drive optimization and boost efficiency, while enabling new operational capabilities. Establishing a strong governance framework is essential to guide their autonomy, especially in high-risk environments where precision and control are vital.

As organizations adopt more artificial intelligence, AI governance becomes an urgent issue, especially now that AI assistants and agents are spreading (see the previous post on data governance and AI).

While GenAI tools initially created content, made predictions, or provided information in response to human input, agents can now explore the world and perform complex tasks autonomously. Furthermore, they can make decisions on the fly and adapt to changing conditions. This poses entirely new challenges for AI governance.

Now, what do we mean by AI governance? Artificial intelligence governance refers to the processes, standards, and guardrails that help ensure the safety and ethics of AI systems and tools. AI governance frameworks guide AI research, development, and application to ensure safety, fairness, and respect for human rights.

With regard to agentic AI, governance frameworks will need to be updated to account for its autonomy. The economic potential of agents is enormous, but so is the associated risk landscape. Encouraging intelligent systems to operate more safely, ethically, and transparently will be a growing concern as they become more autonomous.

Automated agents are already widespread: in customer service, financial services, logistics planning, and predictive maintenance and anomaly detection, to mention a few areas.

1. How to Integrate Agent Behavior with Business Needs

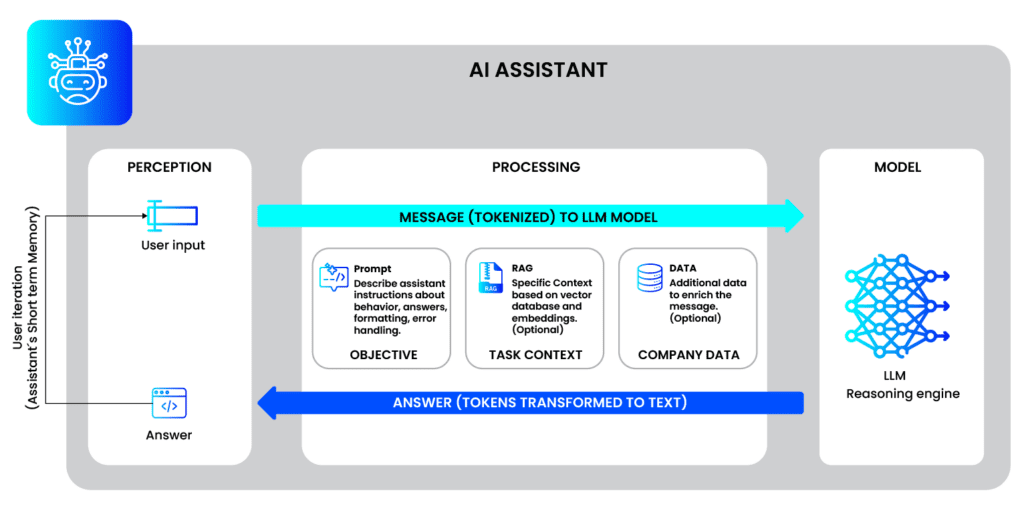

AI agent behavior in business refers to the ability of AI systems to operate with a certain degree of autonomy, make decisions, initiate actions, and interact with other systems or humans, usually in pursuit of defined goals.

In a business environment, this means that agents can optimize workflows, negotiate, recommend actions, or even, if necessary, execute them.

For example, in the Oil and Gas sector, the convergence of predictive maintenance and anomaly detection with artificial intelligence and machine learning is transforming industrial management. Large Language Models (LLMs) contribute to classifying enterprise texts and data, interpreting reports, and answering queries in real time: they can respond to expert questions about extraction, production, and projections of future resource exploitation, help anticipate potential failures or anomalies, and assist in carrying out an emergency response plan.

However, the behavior of these models is determined by the data used to train them, which must be high-quality and fully audited, and by the algorithms themselves, which are becoming increasingly dynamic because they can learn on the fly from their own actions.

Currently, many AI agents, especially the most advanced agents powered by machine learning, perform decision-making processes that are not easy for humans to interpret. Unlike rule-based systems with traceable logic, machine learning models make decisions based on complex patterns in the data that, in some cases, even their developers cannot fully understand. This opacity makes it difficult to audit AI-driven decisions, which poses a traceability challenge in constantly evolving automation use cases.

The key question is, how do we ensure that the behavior of automated agents is aligned with the behavior of the organization?

Three fundamental aspects make up the core of a company: goals, values, and legal requirements. Each gives rise to a key risk that needs to be addressed and minimized:

- Misaligned Decisions

Let’s suppose an AI agent is designed to optimize and increase oil and gas production. However, the company where it operates has among its core objectives reducing the environmental impact of drilling equipment and early detection of pipeline leaks.

This agent may be making the best recommendations for the company in cost-benefit or profitability terms, but it never considers using natural gas instead of diesel in drilling equipment (which reduces CO2 emissions by 50%), because it was trained to weigh only economic criteria, not environmental ones.

This is an example where the agent’s optimization objective was not correct because it was not fully aligned with the company’s priorities.
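To make the misalignment concrete, here is a minimal sketch of how the same two options rank under a profit-only objective versus one that also prices emissions. Everything in it is an illustrative assumption: the `Option` fields, the profit and emission figures, and the `emission_penalty` weight are hypothetical and not taken from any real operation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    profit: float      # hypothetical profit, arbitrary units
    co2_tonnes: float  # hypothetical emissions, arbitrary units


def score(option: Option, emission_penalty: float = 0.0) -> float:
    """Profit minus a penalty per tonne of CO2 (0.0 means profit-only)."""
    return option.profit - emission_penalty * option.co2_tonnes


def best(options: list[Option], emission_penalty: float = 0.0) -> Option:
    """Return the option with the highest score under the given penalty."""
    return max(options, key=lambda o: score(o, emission_penalty))


# Hypothetical figures: gas rigs earn slightly less but emit half as much.
OPTIONS = [
    Option("diesel rigs", profit=100.0, co2_tonnes=40.0),
    Option("natural gas rigs", profit=95.0, co2_tonnes=20.0),
]

profit_only = best(OPTIONS)                    # objective ignores emissions
aligned = best(OPTIONS, emission_penalty=0.5)  # objective includes emissions
```

With these made-up numbers, the profit-only objective picks the diesel rigs and the emissions-aware objective picks the natural gas rigs. The agent is not "wrong" in either case; it optimizes exactly what it was given.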

- Lack of Transparency

LLMs, such as ChatGPT, can be used to access industry knowledge bases, such as operations manuals and safety procedures, and to recommend decisions in the specific context of oil and gas. Generative AI can also retain and transfer expert knowledge, powering digital tools that answer queries on complex topics such as formation damage (any process that reduces an oil or gas reservoir’s ability to efficiently produce fluids). This facilitates informed decision-making, especially for less experienced professionals.

However, if we don’t understand how the LLM arrives at a recommendation, and we delegate that understanding entirely to an agent, the decision (which could be wrong) will rest on a complex neural network model with limited explainability.

Imagine having to explain a neural network to shareholders or investors who don’t have a deep understanding of the subject, with only mathematical explanations to offer. It will be truly difficult to convince someone to invest in one asset or another.

This lack of transparency can undermine adherence to the company’s values and erode trust with customers, shareholders, and regulators.

- Security and Regulatory Compliance

Here, the priority is to safeguard the security of the data and infrastructure our agents operate on, backed by sound regulatory compliance policies.

Today, LLMs and chatbots that communicate with users in natural language can be tricked into generating harmful content. The decentralized deployment of AI agents makes it difficult to implement uniform security measures.

Agent systems often rely on APIs to integrate with external applications and data sources. Poorly managed APIs can expose vulnerabilities, making them targets for cyberattacks. Cybersecurity risks include adversarial attacks, data leaks, and unauthorized access that exposes sensitive information. To mitigate these risks, APIs must have access controls and authentication mechanisms to prevent unauthorized interactions.
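As a rough illustration of access control plus authentication for agent API calls, the sketch below checks a token and a per-agent scope before allowing an interaction. The registry, agent id, token, and scope names are all hypothetical; a production system would normally rely on an identity provider or API gateway rather than an in-memory dictionary.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentCredential:
    token_sha256: str  # store a hash, never the raw token
    scopes: frozenset = field(default_factory=frozenset)


def hash_token(token: str) -> str:
    return hashlib.sha256(token.encode("utf-8")).hexdigest()


# Hypothetical registry: agent id -> credential and allowed scopes.
REGISTRY = {
    "maintenance-agent": AgentCredential(
        token_sha256=hash_token("example-token"),
        scopes=frozenset({"sensors:read", "workorders:create"}),
    ),
}


def authorize(agent_id: str, token: str, required_scope: str) -> bool:
    """Allow the call only if the agent is known, authenticated, and in scope."""
    cred = REGISTRY.get(agent_id)
    if cred is None:
        return False
    # Constant-time comparison avoids leaking information through timing.
    authenticated = hmac.compare_digest(cred.token_sha256, hash_token(token))
    return authenticated and required_scope in cred.scopes


can_read = authorize("maintenance-agent", "example-token", "sensors:read")
can_open_valve = authorize("maintenance-agent", "example-token", "valves:write")
```

The design choice worth noting is deny-by-default: an unknown agent, a wrong token, or a scope that was never granted all lead to the same refusal.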

In addition to security, organizations must also comply with regulations when designing AI agents. Rather than only testing models before deployment, organizations can create simulated environments where AI agents make decisions without real-world consequences, and monitor their behavior closely before full deployment.
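One way to picture such a simulated environment is a stand-in object that accepts the agent’s actions, records them, and lets us count unsafe states without touching real equipment. The pump, its pressure limits, and the deliberately naive agent policy below are hypothetical and chosen only to show the pattern.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedPump:
    """Stand-in for a real pump controller; nothing here touches hardware."""
    pressure_bar: float
    max_safe_bar: float
    log: list = field(default_factory=list)

    def set_pressure(self, value: float) -> None:
        self.log.append(f"set_pressure({value})")
        self.pressure_bar = value


def naive_agent(pump: SimulatedPump) -> None:
    """Hypothetical policy: raise pressure by 10% each step to boost throughput."""
    pump.set_pressure(round(pump.pressure_bar * 1.10, 2))


def run_trial(steps: int):
    """Run the agent in the sandbox and count the unsafe states it reaches."""
    pump = SimulatedPump(pressure_bar=80.0, max_safe_bar=100.0)
    violations = 0
    for _ in range(steps):
        naive_agent(pump)
        if pump.pressure_bar > pump.max_safe_bar:
            violations += 1
    return violations, pump.log


violations, action_log = run_trial(steps=4)
```

In this toy run the agent exceeds the safe limit on its third and fourth steps, which is exactly the kind of finding we want to surface in a sandbox rather than in the field.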

2. Key Dimensions of AI Agent Governance

There are currently at least four key dimensions when we talk about governance:

A) Transparency: This means that both technical and commercial teams can understand how the AI agent works. It requires traceability, the ability to track inputs, decisions, and actions (outputs), and explainability, the ability to explain why the agent chose a particular action in relation to the organization’s policies.

Returning to the Oil and Gas case, let’s suppose an AI agent has to decide how to react to a pump leak. In that case, it follows structured steps defined in an emergency response plan, under constant supervision by trained (human) personnel, so each action can be traced back to a documented procedure.
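Traceability and explainability can be sketched as a structured record written for every decision: what the agent saw, what it concluded, what it did, and which policy step justified it. The field names, the agent id, the sensor values, and the policy reference below are hypothetical, and the in-memory list stands in for whatever append-only store an organization actually uses.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    agent_id: str
    inputs: dict
    decision: str
    action: str
    policy_ref: str  # which policy or plan step justified the action
    timestamp: str   # UTC, ISO 8601


def record_decision(agent_id, inputs, decision, action, policy_ref, sink):
    """Append one JSON line per decision to the given sink and return the record."""
    rec = DecisionRecord(
        agent_id, inputs, decision, action, policy_ref,
        datetime.now(timezone.utc).isoformat(),
    )
    sink.append(json.dumps(asdict(rec), sort_keys=True))
    return rec


audit_log = []  # stand-in for an append-only store
rec = record_decision(
    agent_id="leak-response-agent",
    inputs={"sensor": "P-101", "pressure_drop_pct": 18},
    decision="suspected pump leak",
    action="notify on-call operator",
    policy_ref="emergency-response-plan/step-2",
    sink=audit_log,
)
```

Storing the policy reference alongside the inputs and the action is what lets a reviewer answer "why did the agent do this?" without reverse-engineering the model.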

B) Accountability: A clear assignment of roles is required (who designs, who monitors, who validates, etc.). AI agents do not exist in the abstract; they are designed, implemented, and managed by people. Defining who is responsible for each stage of an AI agent’s life, from initial design to ongoing monitoring, is what makes adequate governance possible.

For example, data scientists may be responsible for model accuracy, data engineers for architecture, legal teams for compliance, and operations teams for monitoring the behavior of AI agents in the real world.

C) Continuous auditing: It’s not just about auditing every quarter, every semester, or once a year. It must be an ongoing process that requires the company to have dashboards with logs, bias reviews, performance, and process monitoring, just to mention a few items.

Continuously recording agent actions provides model traceability and feeds our internal analysis: periodic reviews assess performance degradation and, if urgently necessary, some agents can be deactivated. Biases or behavioral deviations may arise, and agents may even make decisions that harm small groups of people (whether customers or internal areas of the company) or that are ethically questionable. These tools provide the feedback needed to keep all AI agents aligned with corporate objectives.
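A minimal sketch of continuous monitoring is a rolling window over recent outcomes that pauses the agent when its success rate degrades. The window size, the threshold, and the idea of reducing each outcome to a single success flag are simplifying assumptions; real dashboards would track bias and process metrics as well.

```python
from collections import deque


class AgentMonitor:
    """Tracks recent outcomes and deactivates the agent when quality drops."""

    def __init__(self, window: int, min_success_rate: float):
        self.outcomes = deque(maxlen=window)  # keeps only the latest outcomes
        self.min_success_rate = min_success_rate
        self.active = True

    def success_rate(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence of degradation yet
        return sum(self.outcomes) / len(self.outcomes)

    def record(self, success: bool) -> None:
        self.outcomes.append(success)
        window_full = len(self.outcomes) == self.outcomes.maxlen
        if window_full and self.success_rate() < self.min_success_rate:
            # Pause pending review; reactivation is left to a human decision.
            self.active = False


monitor = AgentMonitor(window=5, min_success_rate=0.8)
for ok in [True, True, True, True, True, False]:
    monitor.record(ok)
still_active = monitor.active    # window holds 4 successes out of 5
monitor.record(False)
now_paused = not monitor.active  # window holds 3 successes out of 5
```

Because the check runs on every recorded action rather than on a quarterly schedule, degradation is caught as soon as the window reflects it.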

D) Human-in-the-loop/override: In line with the above, there should be a human in the loop with the power to override, because even autonomous agents should operate under human supervision.

Human-in-the-loop mechanisms ensure that people can intervene when agents encounter new situations or high-risk decisions, so that a human can correct the behavior or override the agent’s policy or actions. Governance policies should clearly define escalation routes: if something breaks or fails, which person in the organization takes charge of the response. They should also define when an agent must be paused and which decisions require human approval.
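The escalation logic described above can be sketched as a small routing function that decides whether an action runs automatically, waits for human approval, or pauses the agent. The risk scale, both thresholds, and the role names in `ESCALATION_OWNER` are hypothetical placeholders that each organization would define in its own policies.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_APPROVAL = "human_approval"
    PAUSE_AGENT = "pause_agent"


@dataclass(frozen=True)
class ProposedAction:
    name: str
    risk_score: float  # 0.0 (harmless) to 1.0 (severe); hypothetical scale
    seen_before: bool  # False when the situation is new to the agent


# Hypothetical escalation routes: who takes charge for each outcome.
ESCALATION_OWNER = {
    Route.HUMAN_APPROVAL: "shift supervisor",
    Route.PAUSE_AGENT: "operations manager",
}


def route(action: ProposedAction,
          approval_threshold: float = 0.5,
          pause_threshold: float = 0.9) -> Route:
    """Escalate high-risk or unfamiliar actions instead of executing them."""
    if action.risk_score >= pause_threshold:
        return Route.PAUSE_AGENT
    if action.risk_score >= approval_threshold or not action.seen_before:
        return Route.HUMAN_APPROVAL
    return Route.AUTO_EXECUTE


routine = route(ProposedAction("log sensor reading", 0.1, seen_before=True))
novel = route(ProposedAction("reroute flow", 0.2, seen_before=False))
severe = route(ProposedAction("shut down pipeline", 0.95, seen_before=True))
```

Here the routine action executes on its own, the unfamiliar one waits for approval even though its risk score is low, and the severe one pauses the agent and goes to the owner named for that route.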

3. How to Implement AI Governance Within the Organization

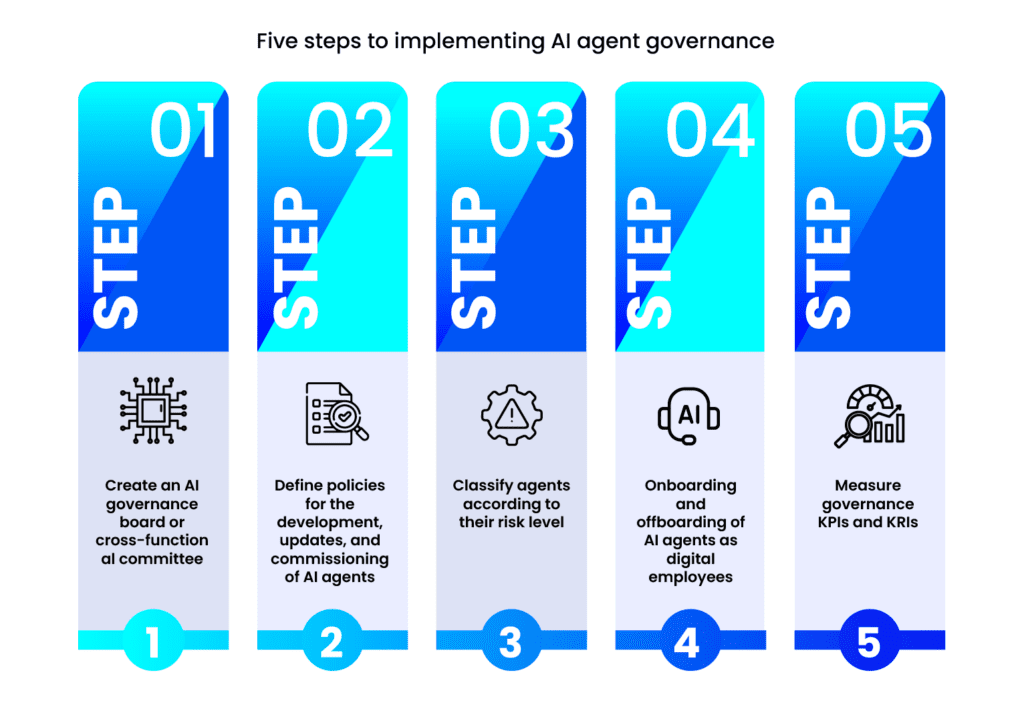

Currently, there are at least five steps to implementing AI agent governance.

1) Create an AI governance board or cross-functional committee (composed of areas such as IT, Legal, Human Resources, Business, Compliance, Ethics, etc.).

2) Define policies for the development, updates, and commissioning of AI agents. This means that agents should not be deployed without policies that cover the entire software lifecycle and always manage the version updates necessary for proper operation.

3) Classify agents according to their risk level. Keep a formal catalog of all agents in the organization and classify each one by at least three variables: autonomy, criticality, and risk exposure. This classification allows for differentiated governance.

4) Onboarding and offboarding of AI agents as digital employees. Agents should be monitored as if they were digital employees, and their integration into or removal from the company’s processes must be formally approved.

5) Measure governance KPIs and KRIs: trust, autonomy, efficiency, and incidents related to AI agents.
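Steps 3 and 5 lend themselves to a small sketch: a catalog that scores each agent on autonomy, criticality, and risk exposure, plus one example risk indicator. The 1-to-3 scales, the tier cut-offs, the agent names, and the "incidents per 1,000 actions" metric are assumptions made for illustration; each organization would calibrate its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentEntry:
    name: str
    autonomy: int       # 1 (suggests only) to 3 (acts without approval)
    criticality: int    # 1 (low business impact) to 3 (safety-critical)
    risk_exposure: int  # 1 (internal data only) to 3 (physical or regulated assets)


def governance_tier(agent: AgentEntry) -> str:
    """Map the three scores to a tier using hypothetical cut-offs."""
    total = agent.autonomy + agent.criticality + agent.risk_exposure
    if total >= 7:
        return "high"
    if total >= 5:
        return "medium"
    return "low"


def incidents_per_thousand(incidents: int, actions: int) -> float:
    """Example KRI: incidents per 1,000 agent actions."""
    return 0.0 if actions == 0 else 1000 * incidents / actions


# Hypothetical catalog entries.
CATALOG = [
    AgentEntry("report summarizer", autonomy=1, criticality=1, risk_exposure=1),
    AgentEntry("maintenance scheduler", autonomy=2, criticality=2, risk_exposure=1),
    AgentEntry("production optimizer", autonomy=3, criticality=3, risk_exposure=3),
]

tiers = {agent.name: governance_tier(agent) for agent in CATALOG}
kri = incidents_per_thousand(incidents=3, actions=1500)
```

The tier can then drive differentiated governance, for example requiring human approval and tighter monitoring only for the "high" tier.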

Closing Thoughts

As agentic AI systems become more autonomous, ensuring their safe and ethical operation becomes a growing challenge. Organizations must adopt scalable governance models, implement robust technology infrastructure and risk management protocols, and integrate human oversight. If organizations, especially in Oil & Gas, can scale agent systems securely, they can realize substantial value.

Strategic recommendations include:

- Governing AI is governing the business: AI agents must always be aligned with the company, its strategic objectives, and corporate values.

- Start with governance pilot projects in high-impact areas: Rather than attempting to implement comprehensive AI governance across the entire organization from day one, a more effective approach is to launch governance pilot projects in specific high-impact areas, such as agents that optimize hydrocarbon extraction and production operations.

- Importance of documentation, training, and technical auditing from day one: Successful governance begins with a strong foundation. Although documentation is often tedious for engineers, the agent’s designed behavior and limitations must be well documented and audited.

And remember, «an agent without governance isn’t intelligent; it’s uncontrollable. In the new era of agentic AI, governance isn’t an obstacle to innovation. It’s the key to making AI both powerful and safe».